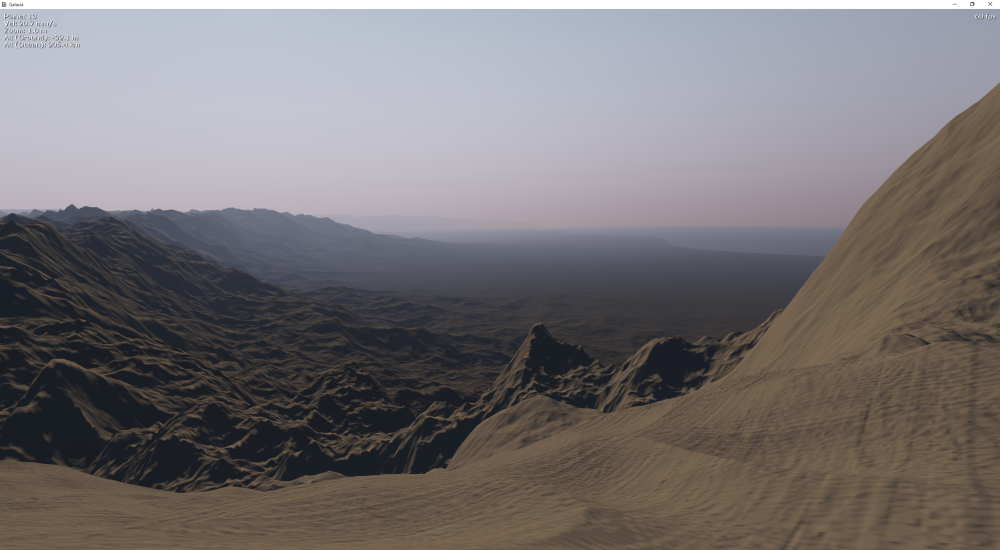

So I’ve just spent the last two weeks building this terrain engine. It’s the second approach I’ve tried in this project for creating a realistically sized planet surface with resolution high enough to walk around on.

The first approach I tried was a projective grid, where a fixed grid is spread over the terrain in screen space, as described somewhere in this thesis. This results in the vertices “sliding” over the terrain, causing quite a bit of wibbly-wobbliness in areas where the terrain is undersampled. The effect can be quite distracting when the camera is moving, and I spent a considerable amount of time earlier this year playing with my terrain generation to avoid the undersampling. In the end I had something that was acceptable for relatively distant terrain.

The main problem with this method appeared when trying to get enough grid resolution in the foreground. The issue is the possible height range of the terrain: on my test planet, at about 10x the diameter of Earth, the terrain height range is something like 300km. Since the actual terrain height isn’t known until it is evaluated on the grid, projecting the grid has to assume that the nearest grid row could be 300km away at all times. The field of view at 300km is very wide, so the nearest grid row has to be spread very thin, leaving nowhere near enough resolution up close. The method could possibly be improved by precomputing the nearest distances or tweaking the projection matrices, but I concluded that the best use for this grid method was ocean rendering, where wave motion somewhat hides the vertex sliding effect and the min/max height (tidal) range is small (maybe 100m for this huge planet).
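To put rough numbers on the foreground problem, here is a back-of-the-envelope sketch. It assumes a simple pinhole camera with a hypothetical 60° horizontal field of view and a hypothetical 256 vertices per grid row. Neither figure comes from the engine, and `row_spacing` is just an illustrative helper.

```python
import math


def row_spacing(distance_m: float, fov_deg: float, columns: int) -> float:
    """Spacing between adjacent vertices of one projective-grid row.

    Assumes a pinhole camera: the visible width at distance d is
    2 * d * tan(fov / 2), shared evenly between `columns` vertices.
    """
    width_m = 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)
    return width_m / columns


# Hypothetical camera and grid, not the engine's real settings.
FOV_DEG = 60.0
COLUMNS = 256

# Worst case: the nearest row is placed as if the terrain were 300 km away.
worst = row_spacing(300_000.0, FOV_DEG, COLUMNS)
# What a walking viewpoint actually needs: a row about 10 m in front.
walking = row_spacing(10.0, FOV_DEG, COLUMNS)

print(f"worst-case vertex spacing: {worst:.0f} m")
print(f"spacing 10 m away:         {walking:.3f} m")
```

Under these assumptions, the worst-case row has vertices roughly 1.35 km apart, compared with a few centimetres for a row 10 m away. The ratio is simply 300 km / 10 m = 30,000, whatever FOV and column count you pick.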

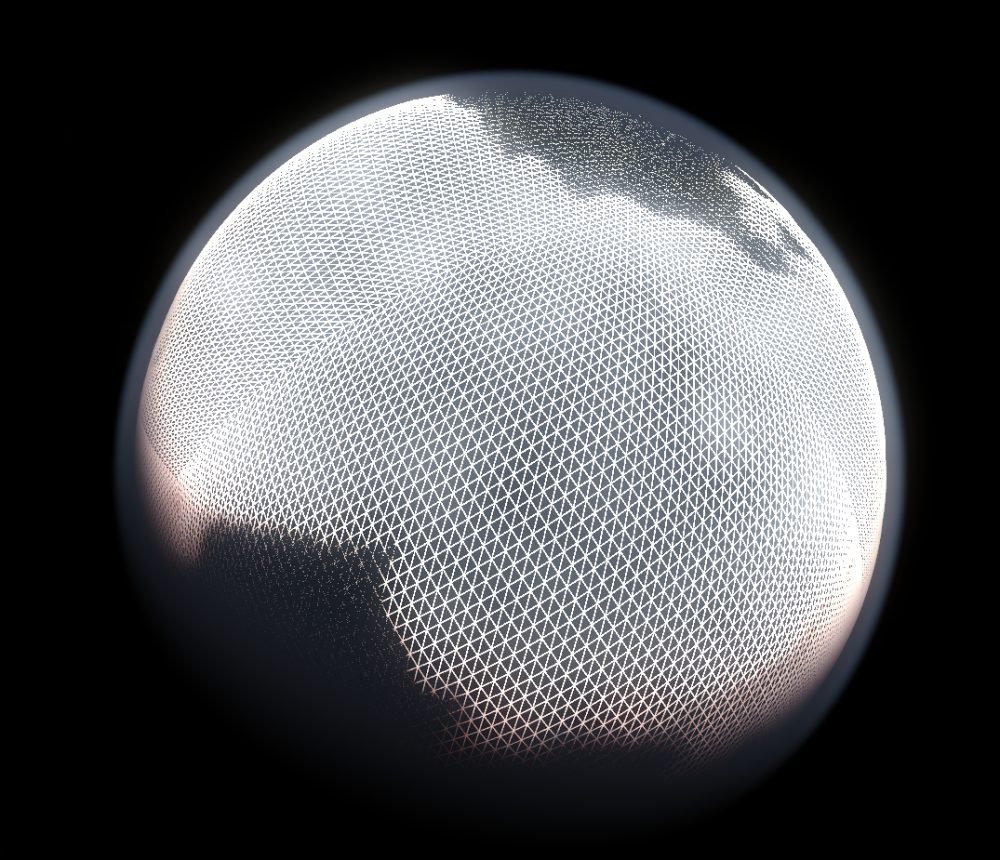

I went googling for a few days, and this quadtree approach is what I decided to implement. Quadtrees are pretty much standard for terrain rendering, but they need special care on a spherical surface. For example, the simplest way to completely cover the sphere is to project a cube onto it. Nodes near the cube corners then become quite stretched and can be much smaller than nodes of the same level in the middle of a cube face. But that’s not much of a problem if nodes are split according to their actual size rather than their level.
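The sketch below illustrates the corner shrinkage. It assumes the plain “normalize the cube point” projection (other cube-to-sphere mappings exist that reduce the distortion). It also uses a hypothetical split rule based on each node’s real edge length relative to camera distance, since the engine’s actual criterion isn’t described here. All function names are illustrative.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def cube_to_sphere(u: float, v: float) -> Vec3:
    """Project a point on the +Z cube face (u, v in [-1, 1]) onto the unit
    sphere by normalizing it (the simplest cube-to-sphere mapping)."""
    n = math.sqrt(u * u + v * v + 1.0)
    return (u / n, v / n, 1.0 / n)


def angle_between(a: Vec3, b: Vec3) -> float:
    """Great-circle angle in radians between two unit vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    return math.acos(max(-1.0, min(1.0, dot)))


def node_edge_angle(u0: float, v0: float, size: float) -> float:
    """Angular length of the bottom edge of the face-space node
    [u0, u0 + size] x [v0, v0 + size] once projected onto the sphere."""
    return angle_between(cube_to_sphere(u0, v0), cube_to_sphere(u0 + size, v0))


def should_split(edge_angle: float, radius_m: float, distance_m: float,
                 max_ratio: float = 0.5) -> bool:
    """Hypothetical split rule: split when the node's real edge length on the
    planet is large compared with its distance from the camera."""
    return edge_angle * radius_m / distance_m > max_ratio


# Two level-4 nodes on the same face: one at the face centre, one in a corner.
LEVEL = 4
SIZE = 2.0 / (1 << LEVEL)  # the face spans [-1, 1], so each node is 0.125 wide
centre = node_edge_angle(0.0, 0.0, SIZE)
corner = node_edge_angle(1.0 - SIZE, 1.0 - SIZE, SIZE)
print(f"centre edge: {centre:.4f} rad, corner edge: {corner:.4f} rad")
```

Both nodes are at the same quadtree level, yet the corner node’s edge covers only about half the arc of the centre node’s. A size-based rule like `should_split` therefore behaves more evenly across the face than splitting by level alone.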

Floating-point precision is always an issue when dealing with such a large range of scales. Since a consumer GPU can only work efficiently with 32-bit floats, terrain generation on the GPU is limited in precision. Using 3D noise functions with floats on this 10x Earth-sized planet, the resolution is limited to something like 100m horizontally and worse than 1m vertically. This isn’t really ideal for walking around on, as everything becomes big noisy blocks (maybe for Minecraft…)
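A quick way to see the limit is to measure the gap between adjacent float32 values at the planet’s radius. This sketch uses only the Python standard library: `struct` rounds to float32, and `math.ulp` (Python 3.9+) handles doubles. It assumes a radius of ten times Earth’s mean radius of roughly 6371 km. The raw float32 spacing it reports is only a lower bound. The arithmetic inside a noise function (scaling by frequency, taking fractional parts) typically loses further bits, which presumably contributes to the coarser effective resolution described above.

```python
import math
import struct


def to_f32(x: float) -> float:
    """Round a Python float (a double) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def ulp_f32(x: float) -> float:
    """Gap between a positive, finite float32 value and the next float32 up."""
    f = to_f32(x)
    bits = struct.unpack("<I", struct.pack("<f", f))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - f


# Assumed radius: 10x Earth's mean radius of roughly 6371 km.
RADIUS_M = 10 * 6_371_000.0

print("float32 spacing at the surface:", ulp_f32(RADIUS_M), "m")
print("float64 spacing at the surface:", math.ulp(RADIUS_M), "m")
# A 1 m step along an axis is simply rounded away in float32.
print("1 m step survives in float32?", to_f32(RADIUS_M + 1.0) != to_f32(RADIUS_M))
```

At about 64,000 km from the planet centre, a float32 coordinate can only move in 4 m increments, while a double moves in steps of a few nanometres.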

So to smooth things out, I decided to use a bicubic patch system. Quadtree nodes smaller than a certain size are represented by a bicubic surface computed from the surrounding 16 (4×4) data points. I do this in two passes on the GPU: the first computes a height grid for the patch, and the second computes the bicubic patch grid from the height data. When a higher-detail node is generated, it uses the bicubic surface to place its vertices smoothly. A second level of terrain detail is then added on top of the smooth bicubic surface, allowing for the smallest terrain details with no blockiness.
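The post doesn’t name the bicubic basis, so this sketch assumes uniform Catmull-Rom. That basis passes exactly through the four centre samples of the 4×4 window. Because neighbouring cells use overlapping windows from the same height grid, it also gives continuous heights and slopes across cell boundaries. `vertex_height` and its `detail` callback are hypothetical stand-ins for the second, finer detail layer.

```python
from typing import Callable, Sequence


def catmull_rom(p0: float, p1: float, p2: float, p3: float, t: float) -> float:
    """Uniform Catmull-Rom segment: returns p1 at t = 0 and p2 at t = 1."""
    return 0.5 * (
        2.0 * p1
        + (p2 - p0) * t
        + (2.0 * p0 - 5.0 * p1 + 4.0 * p2 - p3) * t * t
        + (3.0 * (p1 - p2) + p3 - p0) * t * t * t
    )


def bicubic(h: Sequence[Sequence[float]], s: float, t: float) -> float:
    """Evaluate a bicubic surface over the centre cell of a 4x4 height grid.

    h[row][col] holds the 16 samples; the surface passes through h[1][1],
    h[1][2], h[2][1] and h[2][2]. s runs along columns and t along rows,
    both in [0, 1] across that centre cell.
    """
    row_vals = [catmull_rom(h[r][0], h[r][1], h[r][2], h[r][3], s) for r in range(4)]
    return catmull_rom(row_vals[0], row_vals[1], row_vals[2], row_vals[3], t)


def vertex_height(h: Sequence[Sequence[float]], s: float, t: float,
                  detail: Callable[[float, float], float] = lambda s, t: 0.0) -> float:
    """Hypothetical composition: smooth bicubic base plus a finer detail layer."""
    return bicubic(h, s, t) + detail(s, t)


# Example 4x4 window of height samples (a simple ramp).
heights = [[r * 10.0 + c for c in range(4)] for r in range(4)]
print(vertex_height(heights, 0.5, 0.5))
```

Catmull-Rom reproduces linear data exactly, so on the ramp above the surface just interpolates the ramp. Real terrain samples produce a smooth curved surface instead.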

Implementation-wise, I cache the computed height data for each patch in VRAM for re-use in subsequent frames. Each patch also has a generated normal map, which is the source of all the surface normals. The normal maps have a higher resolution than the terrain grids, adding extra visible detail. This also means that more data points need to be computed and temporarily stored in VRAM. In this case, though, floating-point precision is not so much of an issue, and just 16 bits per data point are used. The normal map is in R8G8B8A8 format; alpha is currently unused. All the patch normal maps are 128×128 and are cached in a single 4096×4096 normal map texture, which holds 1024 normal maps (a 32×32 grid of tiles) in 64MB. In the future, this will probably have to be modified to duplicate a one-pixel border around the edge of each normal map, to stop adjacent data bleeding through at the edges (it produces a noticeable artifact).
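The atlas numbers above can be checked, and slot addressing sketched, as follows. The slot layout (row-major, left to right) and the half-texel inset are assumptions for illustration, since the post doesn’t say how slots are assigned. This does not implement the planned duplicated border. It shows a simpler common mitigation, whose trade-off is that the outer half texel of each tile is never sampled.

```python
from typing import Tuple

ATLAS_SIZE = 4096      # atlas width and height in texels (from the post)
TILE_SIZE = 128        # per-patch normal map size in texels (from the post)
BYTES_PER_TEXEL = 4    # R8G8B8A8

TILES_PER_ROW = ATLAS_SIZE // TILE_SIZE                  # 32
TILE_COUNT = TILES_PER_ROW * TILES_PER_ROW               # 1024
ATLAS_BYTES = ATLAS_SIZE * ATLAS_SIZE * BYTES_PER_TEXEL  # 64 MiB


def tile_origin(slot: int) -> Tuple[int, int]:
    """Top-left texel (x, y) of an atlas slot, filled row by row (assumed layout)."""
    if not 0 <= slot < TILE_COUNT:
        raise ValueError(f"slot {slot} outside 0..{TILE_COUNT - 1}")
    return (slot % TILES_PER_ROW) * TILE_SIZE, (slot // TILES_PER_ROW) * TILE_SIZE


def tile_uv_rect(slot: int, inset_texels: float = 0.5) -> Tuple[float, float, float, float]:
    """Normalized (u0, v0, u1, v1) rectangle to sample for a slot.

    Insetting by half a texel keeps bilinear filtering at mip level 0 from
    reading texels that belong to the neighbouring tile.
    """
    x, y = tile_origin(slot)
    return (
        (x + inset_texels) / ATLAS_SIZE,
        (y + inset_texels) / ATLAS_SIZE,
        (x + TILE_SIZE - inset_texels) / ATLAS_SIZE,
        (y + TILE_SIZE - inset_texels) / ATLAS_SIZE,
    )


print(TILE_COUNT, "tiles,", ATLAS_BYTES // (1024 * 1024), "MiB")
print(tile_uv_rect(33))
```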

On my system (i7, GTX780), drawing 500 patches (using instancing to reduce draw calls) doesn’t seem to be a problem. Frustum culling is already in use, but performance could probably be vastly improved by adding occlusion culling (it’s on the to-do list!). Note that efficient culling requires the approximate patch centers and radii to be precomputed on the CPU. So there need to be two versions of the terrain generation algorithm, one for the CPU and one for the GPU. This is quite annoying, since they both need to produce exactly the same output. But it’s not a new problem in this program, since many procedurally generated, GPU-rendered things in the universe also need some sort of matching CPU representation. So I have spent a fair bit of time creating many different noise functions with matching CPU and GPU versions.
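Culling needs those CPU-side patch centres and radii. A common way to use them is a bounding-sphere test against the frustum planes, sketched here. `Plane`, `sphere_in_frustum` and `cull_patches` are hypothetical names. The planes are assumed to be normalized with inward-facing normals; extracting them from the camera’s view-projection matrix is left out.

```python
from dataclasses import dataclass
from typing import Iterable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Plane:
    """Frustum plane dot(normal, p) + d = 0, with a unit normal pointing inward."""
    normal: Vec3
    d: float

    def signed_distance(self, p: Vec3) -> float:
        return sum(n * c for n, c in zip(self.normal, p)) + self.d


def sphere_in_frustum(center: Vec3, radius: float, planes: Sequence[Plane]) -> bool:
    """Conservative test: reject only when the sphere is entirely behind one plane."""
    return all(pl.signed_distance(center) >= -radius for pl in planes)


def cull_patches(patches: Iterable[Tuple[int, Vec3, float]],
                 planes: Sequence[Plane]) -> List[int]:
    """Return ids of patches whose CPU-side bounding spheres may be visible."""
    return [pid for pid, c, r in patches if sphere_in_frustum(c, r, planes)]


# Toy "frustum": the slab -1 <= x <= 1 (real planes would come from the camera).
slab = [Plane((1.0, 0.0, 0.0), 1.0), Plane((-1.0, 0.0, 0.0), 1.0)]
patches = [(0, (0.0, 0.0, 0.0), 0.1), (1, (5.0, 0.0, 0.0), 1.0), (2, (1.5, 0.0, 0.0), 1.0)]
print(cull_patches(patches, slab))
```

Patch 2 straddles the x = 1 plane and is kept, because a bounding sphere only has to touch the frustum. The same logic explains why the CPU-side radii must not underestimate the GPU-generated geometry: a sphere that is too small can cull a patch that is actually visible.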

Oh, and I’ve implemented some rudimentary cross-fading between detail levels, so there’s not much popping of LODs. There’s still the odd hole in the terrain appearing and the odd LOD pop along node edges, but I’m quite satisfied with how it looks for now. Will upload a new video soon.
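One simple way to drive such a cross-fade is a weight that eases the finer level out as its distance approaches the switch point, applied per vertex or per pixel. This is a sketch under assumptions. The post doesn’t describe its fade curve, so the distance-based `smoothstep` ramp, the 20% fade band and the function names are illustrative.

```python
def smoothstep(edge0: float, edge1: float, x: float) -> float:
    """Cubic Hermite ramp from 0 at edge0 to 1 at edge1 (GLSL-style smoothstep)."""
    t = min(max((x - edge0) / (edge1 - edge0), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def lod_fine_weight(distance: float, switch_distance: float,
                    fade_fraction: float = 0.2) -> float:
    """Hypothetical weight of the finer LOD: 1 when close, 0 at or beyond
    switch_distance, easing across the last `fade_fraction` of the range."""
    fade_start = switch_distance * (1.0 - fade_fraction)
    return 1.0 - smoothstep(fade_start, switch_distance, distance)


def blend(coarse: float, fine: float, w: float) -> float:
    """Linear mix of a coarse and a fine value (height, colour channel, ...)."""
    return coarse + (fine - coarse) * w


# A vertex 90 m away from a level that switches out at 100 m.
w = lod_fine_weight(90.0, 100.0)
print(w, blend(12.0, 14.0, w))
```

Using the same weight for both height and shading keeps the geometry and the normal-mapped surface fading together, so neither pops on its own.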

So, next on the list is to generate surface colours and more surface diversity. Many ideas on how…

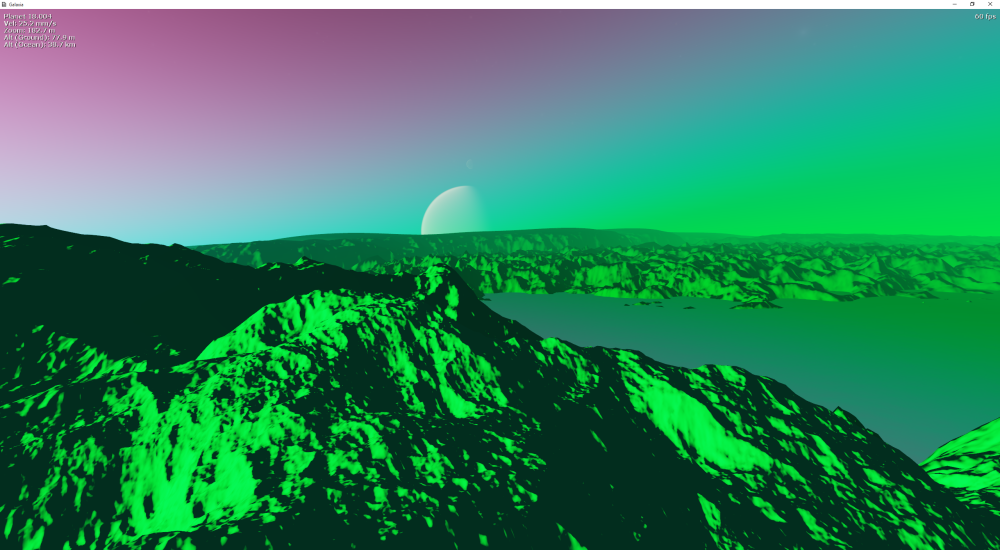

And I’ll finish this with a planet that I found while flying around the universe testing that really deserves to have procedurally generated unicorns. Sadly I did not make a note of which galaxy or star system this was in, so I won’t be going back there any time soon…
