The current raycasting approach to rendering galaxies in Galaxia is already pushing the limits of what is possible with the technique, and the result is somewhat messy and low in detail, in particular the aliasing artifacts are very noticeable. So I’ve been thinking about a different approach to rendering galaxies for a while now, with the aim of better performance, smoother camera motion response, and hopefully the ability to use an octree for LOD purposes.

Most engines that need to render galaxies these days seem to take a particle-based approach, by drawing hundreds of sprites with additive blending, much like how fire effects are usually done in games. The technique does have some drawbacks though, for example the additive blending means that you can’t have light “blocking” particles like smoke or dust interleaved with the light source. It’s not really a problem for rendering fire, but in a galaxy, the dust can appear both in front and behind areas that are emitting light. But perhaps this problem can be worked around by rendering in multiple passes.

So I decided I would try this particle approach for the galaxy rendering. Instead of drawing the particles as billboards though, I’ve decided to try drawing them as inverted cubes. Each pixel drawn for the particle cube will find the integral of the light density for the ray passing through the cube, allowing some simple noise addition. In the screenshot, 16384 randomly positioned solid shaded cubes arranged in a plane are blended into a 256×256 texture to produce the “cloud” effect. Looking at it closely, the cube edges are just visible.

Next step is to remove the visible cube edges with the ray casting, starting by finding the intersection of the ray with a sphere the same size and position as the cube. Once that is done, the integral of the light density along the ray needs to be found. There would be a number of ways to do that, but simple is best in this scenario. Some maths might need to be done…

I found a neat approach to how to arrange particles in a galaxy-like way which I think will be a good starting point for this new approach. Placing the particles on ellipses which are slightly offset from each other I think may allow for the possibility of galaxy collisions, which are something I would really like my new method to be able to do.

That’s all for now. Time to write some more code!

(A few hours later…)

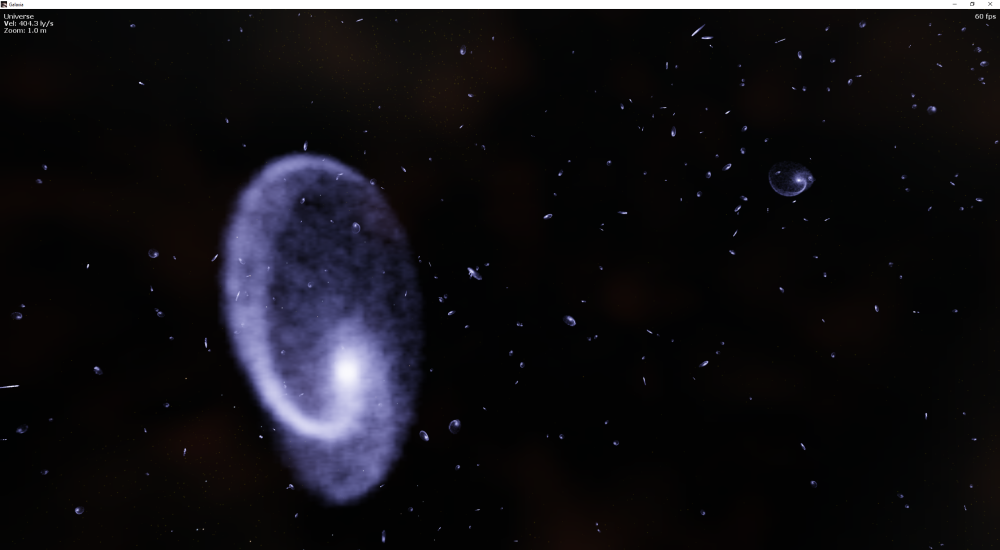

Well I think the elliptical spirals work pretty well! The performance is still much better than the old version, but there’s still a lot more details to add. I do really like how that barred spiral pattern appears as if by magic when just incrementally rotating the concentric ellipses. The even cooler thing about this is that this galaxy could animate in realtime and look somewhat realistic! My stars system won’t be able to handle that sort of thing very well, but it’s definitely something I am going to play with.

(…Later…)

As I suspected, incrementally shifting the positions of the ellipses results in some nice asymmetry. Tweaking the axis of the ellipse rotation can also produce a “warping” effect, and I’m thinking that if I can create a second set of ellipses and align them all correctly, that might be able to produce something resembling a galaxy collision. Since galactic collisions are an important feature of the universe, they are something noticeably missing from Galaxia. Hopefully it can be done this way!

(…)

Incrementing the ellipse rotation angle by a much larger amount results in denser spirals, producing a whirlpool effect… And delaying the angle increments results in much more prominent bars.

(…Playing around with “warping” parameters…)

Some misplaced particles in their cubic form are seen very faintly after warping the outer ellipses. Warping is done by changing the ellipse rotation axis such that it no longer aligns with the galaxy’s rotation axis.

(…)

Some interesting spiral arm patterns resulting from warping.

Here’s the DX11 compute shader that produced this pattern:

RWStructuredBuffer Outputs : register(u0);

cbuffer Galaxy2CSVars : register(b0)

{

uint Index; //galaxy cache index.

uint ParticleCount; //number of particles (not yet used)

float Pad0; //not used

float Pad1; //not used

GalaxyData Galaxy; //the galaxy data, not really used yet

}

[numthreads(128, 1, 1)]

void main(uint3 gtid : SV_GroupThreadID, uint3 gid : SV_GroupID)

{

uint pid = gid.x * 128 + gtid.x; //the particle index in this galaxy.

uint oid = Index + pid; //the output data index in the particle buffer

float4 rnd = RandVec4(pid);

float size = Galaxy.Size.x * 0.025 * (rnd.w*rnd.w+0.5); //randomize the particle size.

float ecc = 0.7; //inverse ellipse eccentricity (circle scaling factor)

float rot = 23.5; //spiral rotation amount / "twistiness"

float off = 0.03; //linear z offset for ellipses - asymmetry factor

float bar = 0.3f; //bar size

float2 warp1 = 0.3; //pre-rotation warp vector

float2 warp2 = 0.01; //post-rotation warp vector

float erad = rnd.x; //size of the galactic ellipse

float theta = rnd.y * 6.283185307f; //angle around the ellipse

float height = rnd.z; //distance from the galactic plane

float2 pxy = float2(cos(theta), sin(theta))*Galaxy.Size.x*erad; //make a circle.

float3 pos = float3(pxy.x, height*Galaxy.Size.y*0.1, pxy.y); //place the circle.

float3 gax = float3(0, 1, 0); //rotation axis for ellipses

gax.xz += erad*warp1; //warp the rotation axis

float angl = saturate(erad-bar)*rot; //angle to rotate the ellipse

gax.xz += erad*angl*warp2; //also warp the axis by the angle amount

float4 q = qaxang(gax, angl); //using quaternions here to orient the ellipses

float4 qi = qaxang(gax, -angl);

pos = mulvq(pos, q); //this line isn't really necessary since it's just rotating a circle

pos.x *= ecc; //turn the circle into an ellipse

pos.z += erad * Galaxy.Size.x * off; //offset ellipses to make some asymmetry

pos = mulvq(pos, qi); //rotate the ellipse to where it should be to make spirals

Galaxy2Particle particle;

particle.Position = pos;

particle.Size = size;

particle.Colour = float4(1,1,2,1)*0.01; //it's just blue for now

Outputs[oid] = particle;

}

And the functions used:

Texture2D g_txRandomByte : register(t0); // permutation random byte tex

SamplerState g_samWrap : register(s0); //permutation sampler (wrap)

static const float g_TexDims = 256.0f;

static const uint g_TexDimu = 256;

static const uint g_TexDimSq = 65536;

static const float g_InvTexDims = 1.0f / g_TexDims;

static const float g_HalfTexel = g_InvTexDims * 0.5f;

float GetPermutation(float2 texcoord)

{

return g_txRandomByte.SampleLevel( g_samWrap, texcoord, 0 );

}

float4 RandVec4(uint i)

{

//This has quite a few perhaps unnecessary calculations but it's like this

// to exactly match the CPU version. I'll rewrite both versions some time.

uint i4 = i*4;

uint nx = i4%g_TexDimSq;

uint ny = (i4+1)%g_TexDimSq;

uint nz = (i4+2)%g_TexDimSq;

uint nw = (i4+3)%g_TexDimSq;

float x = GetPermutation(float2((float)(nx%g_TexDimu)*g_InvTexDims, (float)(nx/g_TexDimu)*g_InvTexDims)+g_HalfTexel);

float y = GetPermutation(float2((float)(ny%g_TexDimu)*g_InvTexDims, (float)(ny/g_TexDimu)*g_InvTexDims)+g_HalfTexel);

float z = GetPermutation(float2((float)(nz%g_TexDimu)*g_InvTexDims, (float)(nz/g_TexDimu)*g_InvTexDims)+g_HalfTexel);

float w = GetPermutation(float2((float)(nw%g_TexDimu)*g_InvTexDims, (float)(nw/g_TexDimu)*g_InvTexDims)+g_HalfTexel);

return float4(x,y,z,w);

}

float3 mulvq(float3 v, float4 q) //rotates a 3D vector by a quaternion.

{

float3 result;

float axx = q.x * 2.0;

float ayy = q.y * 2.0;

float azz = q.z * 2.0;

float awxx = q.a * axx;

float awyy = q.a * ayy;

float awzz = q.a * azz;

float axxx = q.x * axx;

float axyy = q.x * ayy;

float axzz = q.x * azz;

float ayyy = q.y * ayy;

float ayzz = q.y * azz;

float azzz = q.z * azz;

result.x = ((v.x * ((1.0 - ayyy) - azzz)) + (v.y * (axyy - awzz))) + (v.z * (axzz + awyy));

result.y = ((v.x * (axyy + awzz)) + (v.y * ((1.0 - axxx) - azzz))) + (v.z * (ayzz - awxx));

result.z = ((v.x * (axzz - awyy)) + (v.y * (ayzz + awxx))) + (v.z * ((1.0 - axxx) - ayyy));

return result;

}

float4 qaxang(float3 ax, float ang) //creates a quaternion from axis and angle.

{

float ha = ang * 0.5f;

float sha = sin(ha);

return float4(ax.x * sha, ax.y * sha, ax.z * sha, cos(ha));

}

(Perhaps quite a few optimisations could be made! And sorry about the formatting, maybe I should have chosen a more code-friendly blog host…)

… Smoothing out those cubic particles:

The sharp noisy particle cube edges are eliminated by some ray maths. Here’s the vertex shader for the particle cubes that generates the per-vertex ray data for the pixel shader:

StructuredBuffer Inputs : register(t0); //from the CS

cbuffer Galaxy2VSVars : register(b0)

{

float4x4 ViewInv; //(not used in this version)

float4x4 ViewProj; //camera view-projection matrix

float4 GalaxyPosition; //relative to the camera

float4 GalaxySize; //in universe units

float4 GalaxyOrientation; //(quaternion)

float4 GalaxyOrientationInv; //(quaternion)

float2 OutputOffset; //offset for render to impostor

float2 OutputScale; //scaling for render to impostor

float Pad0;

float Pad1;

uint Index; //galaxy cache index

uint ParticleCount; //number of particles

};

struct VSOutput

{

float4 Position : SV_POSITION;

float4 Colour : COLOR0;

float4 Data : TEXCOORD0;

float3 Direction: TEXCOORD1;

float3 RayEnd : TEXCOORD2;

float3 RayStart : TEXCOORD3;

};

VSOutput main(uint iid : SV_InstanceID, float4 pos : POSITION)

{

VSOutput output;

Galaxy2Particle particle = Inputs[Index + iid];

float size = particle.Size;

float fade = 1.0;

float shine = 2.0;

float3 re = pos.xyz * size;

float3 fpos = particle.Position + re;

float3 cpos = GalaxyPosition.xyz + mulvq(fpos, GalaxyOrientation);

float3 dir = mulvq(cpos, GalaxyOrientationInv);

output.Position = mul(float4(cpos, 1.0), ViewProj);

output.Position.z = output.Position.z*output.Position.w*0.0000000000001;

output.Position.xy += (OutputOffset*output.Position.w);

output.Position.xy *= OutputScale;

output.Colour = particle.Colour;

output.Data = float4(size, 0, fade, shine);

output.Direction = dir;

output.RayEnd = re;

output.RayStart = cpos;

return output;

}

The vertex shader is run with the inverted cube geometry, with position coordinates ranging from -1 to +1. DrawIndexedInstanced is used, with the instance count equal to the number of particles, in this case 16384.

And here’s the pixel shader that goes with it:

cbuffer Galaxy2PSVars : register(b0)

{

float4x4 ViewProj;

float4 Magnitude;

};

struct PS_INPUT

{

float4 Position : SV_POSITION;

float4 Colour : COLOR0;

float4 Data : TEXCOORD0;

float3 Direction: TEXCOORD1;

float3 RayEnd : TEXCOORD2;

float3 RayStart : TEXCOORD3;

};

float4 main(PS_INPUT input) : SV_TARGET

{

float4 c = input.Colour;

float size = input.Data.x;

float fade = input.Data.z;

float shine = input.Data.w;

float3 dir = normalize(input.Direction);

float3 re = input.RayEnd;

float3 cpos = input.RayStart;

float tx = (dir.x>0.0?size+re.x:size-re.x)/abs(dir.x);

float ty = (dir.y>0.0?size+re.y:size-re.y)/abs(dir.y);

float tz = (dir.z>0.0?size+re.z:size-re.z)/abs(dir.z);

float t = min(min(tx, ty), tz);

float dist = length(cpos);

float dt = (dist<t?dist:t);

float3 rs = re - (dir * dt);

float hts = 0;

float hte = 0;

bool hit = RaySphereIntersect(rs, dir, size, hts, hte);

if (!hit) discard; //didn't hit the sphere!

float rlen = hte - hts;

float rlenn = 0.5 * rlen / size;

//scale by the square of the relative ray length to approximate

//the light density integral over the ray through the sphere

c *= saturate(rlenn*rlenn);

return c;

}

And the RaySphereIntersect method used here (probably could be improved):

// Returns the near intersection point of a line and a sphere

float NearIntersection(float3 pos, float3 ray, float distance2, float radius2)

{

float B = 2.0 * dot(pos, ray);

float C = distance2 - radius2;

float det = max(0.0, B*B - 4.0 * C);

return 0.5 * (-B - sqrt(det));

}

// Returns the far intersection point of a line and a sphere

float FarIntersection(float3 pos, float3 ray, float distance2, float radius2)

{

float B = 2.0 * dot(pos, ray);

float C = distance2 - radius2;

float det = max(0.0, B*B - 4.0 * C);

return 0.5 * (-B + sqrt(det));

}

//finds whether a ray hits a sphere, and the start and end hit distances

bool RaySphereIntersect(float3 s, float3 d, float r, out float ts, out float te)

{

float r2 = r*r;

float s2 = dot(s,s);

if(s2<=r2) { ts = 0.0; te = FarIntersection(s, d, s2, r2); return true; } ts = NearIntersection(s, d, s2, r2); te = FarIntersection(s, d, s2, r2); return te>ts && ts>0;

}

Since this method finds the distance that the ray has travelled through the sphere (accounting for the camera position!), it produces a nice smooth effect as the camera moves through it, without the aliasing issue that plagues the old implementation. However, dust clouds are still somewhat problematic with this method, and some “fakery” will need to be done to make them look good.

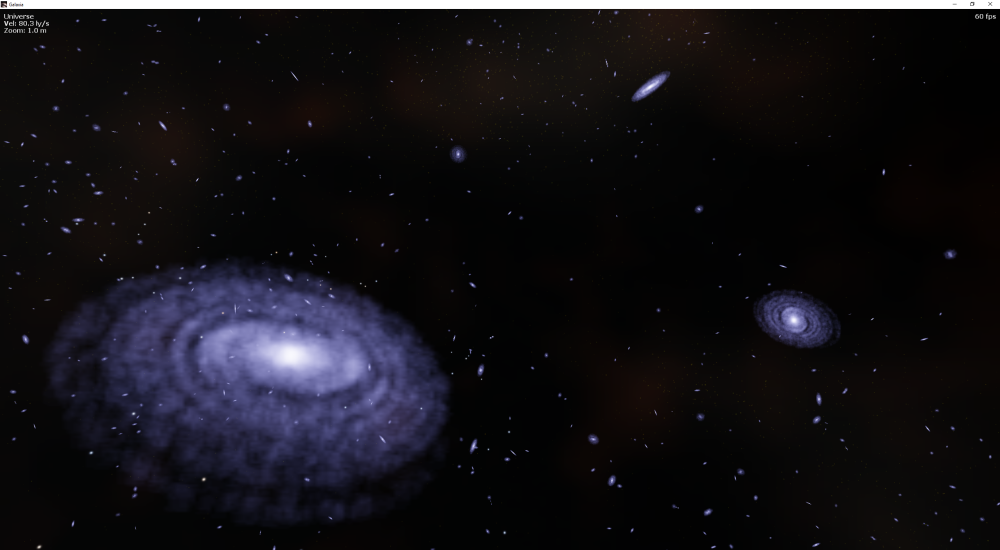

The video shows this galaxy effect from different angles. I think it’s probably just about time for some better colours…

(… The next day…)

Added some colouring using the same code I used to colour stars, based on temperature. I assumed that the temperature of stars is lower near the centre bulge due to the stars being older there, and observations show that the bulge tends to be more yellow/red in colour.

I also was playing with making the spiral arms branch out as can be seen in many spiral galaxies. It probably needs to be a fractal thing though, since the arms could split many times along their length.

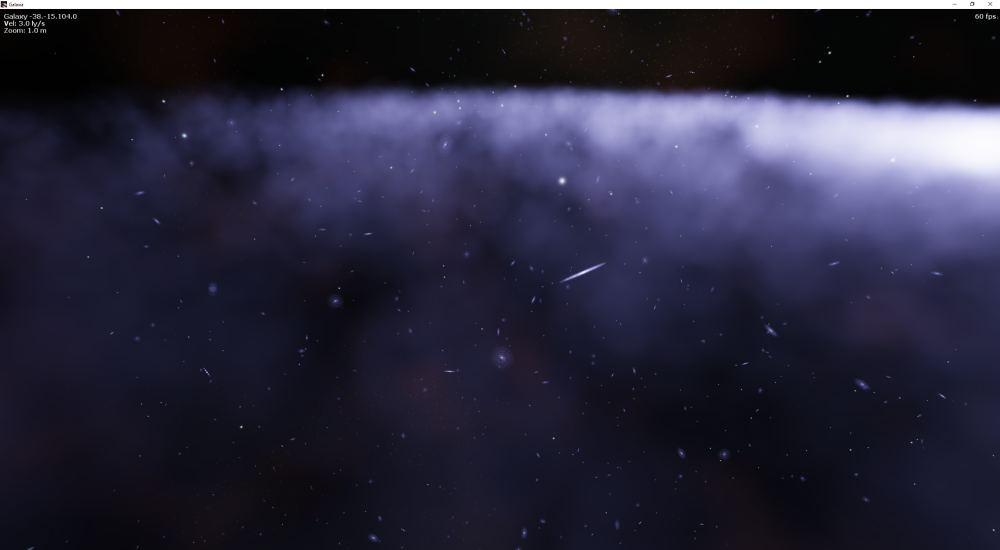

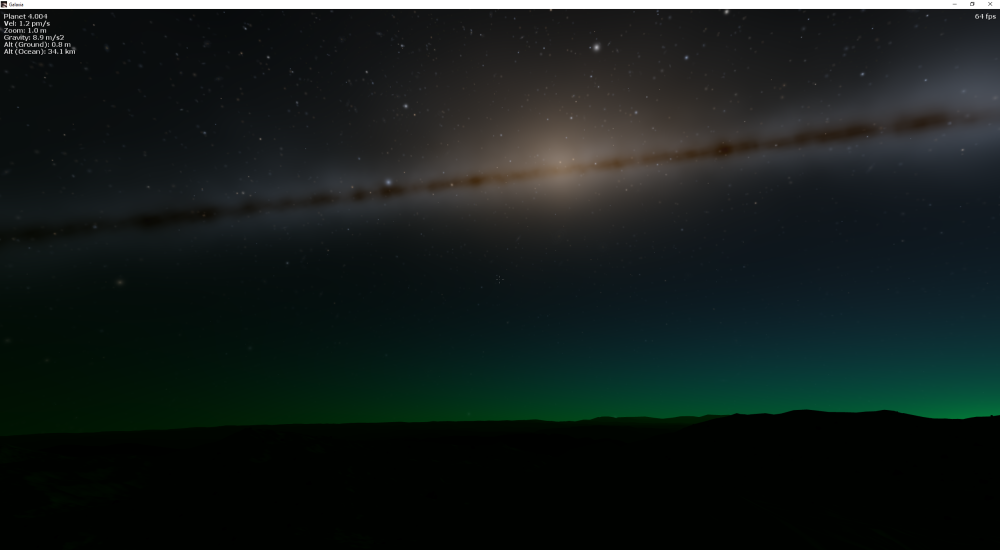

Here’s some pics of the colour distribution from planet surfaces, I think it looks similar to what we see the Milky Way Galaxy as from here on Earth (minus the dust clouds because I haven’t tried implementing those yet).

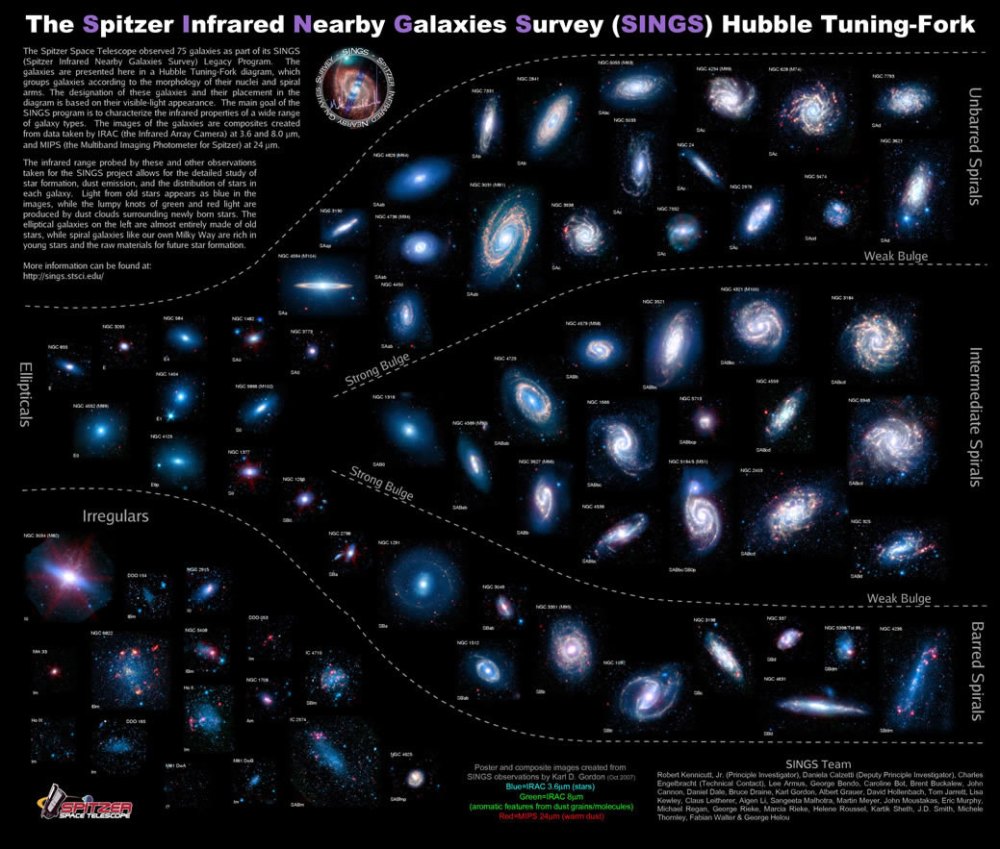

The galaxy shape variation is turning out to be a challenging thing, especially to get them shaped like real galaxies. The Hubble Tuning Fork, or the Hubble Sequence is used by astronomers to classify galaxies, so I think I will start there.

Still figuring out the best way to implement that. Probably with variables such as “bulge size” and “bar size”. My original implementation had those but in a different scale and distribution. But either way, I still need to work on getting the whole range of galaxy shapes out of the algorithm. And I’ll also be working on the dust!

(Another day later)

So I was playing around with adding some dust. I figured the easiest starting point is to just add some particles in with subtractive blending. But for some reason I was getting ridiculous results with the D3D11_BLEND_OP_SUBTRACT blend state, so I decided to just use additive blending and add negative colours. I also had to make sure the final output has the colour value above zero, and alpha between 0 and 1. Since the galaxy is being rendered into a low resolution texture currently, when finally outputting that texture to the screen the pixel shader just makes sure values are in the correct range. Note this would only work with the floating-point render target and texture.

So the dust I think is looking fairly good already, it reminds me a lot of the Elite:Dangerous galaxy. I suspect they are using a similar method.

I’ll be playing with the dust a fair bit more yet. I still want those high detail “wisp” effects that are often seen in galactic dust. The implementation is not currently using any LOD scheme, so I think this first low-res version can serve as a basis where higher detail dust can be “layered” on top when the camera is closer to it.

(Some much darker dust)

(More dust testing and fiddling – planet with moon)

Final update:

It was suggested that I try using a technique called weighted blended order independent transparency (WBOIT) to solve the render order problem for the dust clouds. This now requires that I use two render targets when rendering the low-res galaxy image, and then using both those textures to composite the final image. I based my implementation off some code I found here. It took a fair while of playing with it before I had a good understanding of how its limitations, perhaps I’ll write more about WBOIT in a future article. I still have some slight blending issues with dust using this method though, mainly at the moment it makes the spheres much more obvious than they were due to each particle now making a larger contribution to the end result.

I think the results are quite good but still more work needs to be done to get the higher detail dust lanes to appear. It’s a particularly difficult problem though due to the complex volumetric nature of the dust. I have noted that in engines that render galaxies in high detail, usually billboards are used with a wispy dust lanes type texture to provide the detail. Unfortunately though the illusion breaks when leaving the plane of the galaxy, since the orientation of billboards has a singularity in the calculations leading to the effect of billboards appearing to “spin”. I still need to do more research on this.

I have also implemented elliptical and irregular galaxies, and adjusted the galaxy sizes to be more realistic. Irregular galaxies were achieved by simply using some high warping factors when positioning the particles. Elliptical galaxies have much less dense dust on average and their bulge size parameter is much larger. The temperature range for the glow effect is also set much lower on ellipticals, resulting in a much more yellow colour overall.

Watch the video about the new galaxy types:

Great! Could use these approaches to:

http://martindevans.me/game-development/2016/01/14/Procedural-Generation-For-Dummies-Galaxies/

http://blog.rabidgremlin.com/2015/01/14/procedural-content-generation-creating-a-universe/

http://www.gamedev.net/blog/73/entry-1952708-galaxy-generation/

LikeLike

I remember watching that video many years ago… 🙂

I’ll take a read of these blogs, thanks!

LikeLike

I think this approach would be a good choice.

https://en.wikipedia.org/wiki/Barnes–Hut_simulation

https://github.com/MateusZitelli/barnes-hut-aed2

LikeLike

Sorry I think I replied to the wrong comment, see further down.

LikeLike

Sadly I don’t think it would be possible to have the galaxies generated based off an n-body simulation because it would just take too much computing/time to evolve them to an “old” state. Galaxia needs to be able to generate the galaxies in a fraction of a second, and to get an n-body simulation to look like an interesting galaxy collision takes a very large number of iterations. The older the galaxy, the more iterations it would need to produce hence much longer computing time. All approaches I use for procedural generation have the requirement that very little or no iterative processes like simulations need to be done to produce the result. This needs to be done to make flying around the universe in such a manner possible, unfortunately. If there were infinite computer power however I would definitely be doing n-body simulation!

LikeLike

Yeah realtime would be impossible. But you could generate a few colliding ones in each galaxy cluster and use them to get something looking cool. The points used in the simulation could be used as markers where the galaxies should be denser and there you generate more stars in realtime

At some point you will have to generate some stones, trees and other static meshes for you planets. Going to be impossible to generate everything in real realtime. Like in no mans sky there is travel time between systems when they generate such stuff.

LikeLike

Well it might be worth investigating but whenever I’ve done n-body stuff before it’s just been far too computationally expensive to use in this sort of context. Maybe for generating a single galaxy it would be fine, but there would be big problems when moving at high velocity between the galaxies…

Also note that the rocks on planet surfaces are actually all procedurally generated already, each one is unique! (although they are very similar). I’m doing my very best to not have any loading time for anything, so I’m exploring all possible options that are cheap to compute first. The sorts of thing I’m thinking may require some sort of preprocessing are things like rivers, and possibly galactic dust…

LikeLike

Galactic simualiton using n-body simulation is innefecient, super-cmputers can’t handle it.

Pre-computed simulations arent’s good enough too. So what do you do? Separate in layers; Outer layer, wich the group of stars is orbiting at lower speed than the inner layer, and intermediate layers. More layers, more things. About erosion, it’s litteraly impossible with a GTZ 1080 render and continental landscape erosion.

LikeLike

Indeed! This is pretty much why noise functions have to be used for everything…

Still haven’t decided if I’ll make the galaxies actually rotate yet… Although with this rendering method at least it would be trivial to animate the clouds rotating in a realistic sort of way.

LikeLike

In this scale, it’s simple. But, if you want, render the gas clouds as giant cubes, and make every cube move, then, add blur and some fancy things.

LikeLike

Well the clouds are all rendered in this version using inverted cubes… I haven’t tried making them move yet, and I’m not really sure how galaxy rotation can fit in with the rest of my universe at this stage (it is a much longer timescale than planet orbits). But I think it will be a nice thing to watch!

LikeLike